Ning Liu

Applied Scientist at Amazon, Bellevue, WA.

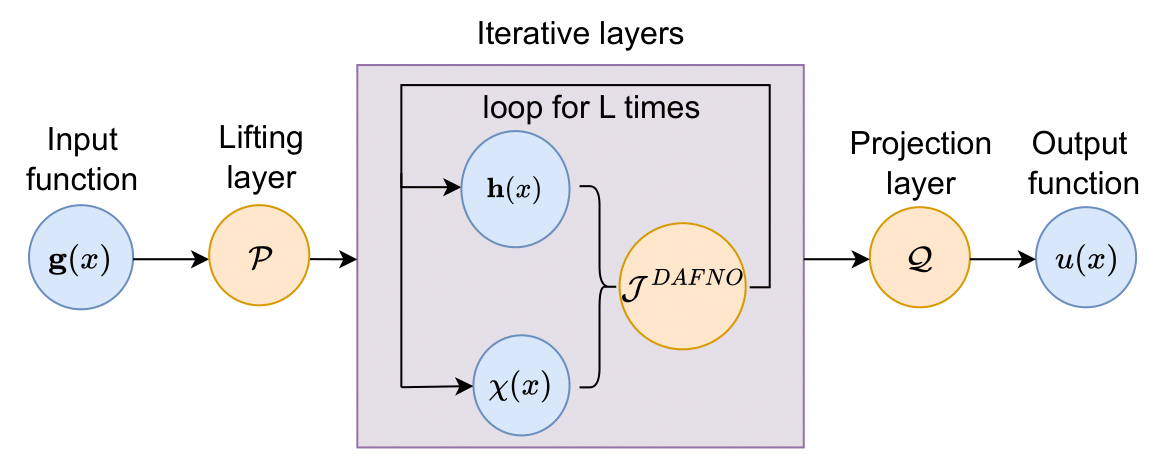

I am a Machine Learning Scientist with 7 years of experience developing and deploying machine learning solutions at scale. My work addresses challenges in LLM post-training, reasoning, and agentic systems, and advances next-generation design in complex physics-based scenarios. I focus on LLMs and attention-based foundation models, generative models, meta-learning, disentangled and causal representation learning, and neural operator learning.

I earned my Ph.D. from the University of Michigan, Ann Arbor, and I actively collaborate with researchers across academia. Prior to joining Amazon, I was a Principal Machine Learning Scientist at Global Engineering & Materials, Inc. I am passionate about advancing the field of machine learning, and my work has been published at top-tier conferences such as ICML, NeurIPS, and AISTATS.

Feel free to reach out if you would like to discuss research or explore potential collaborations!

news

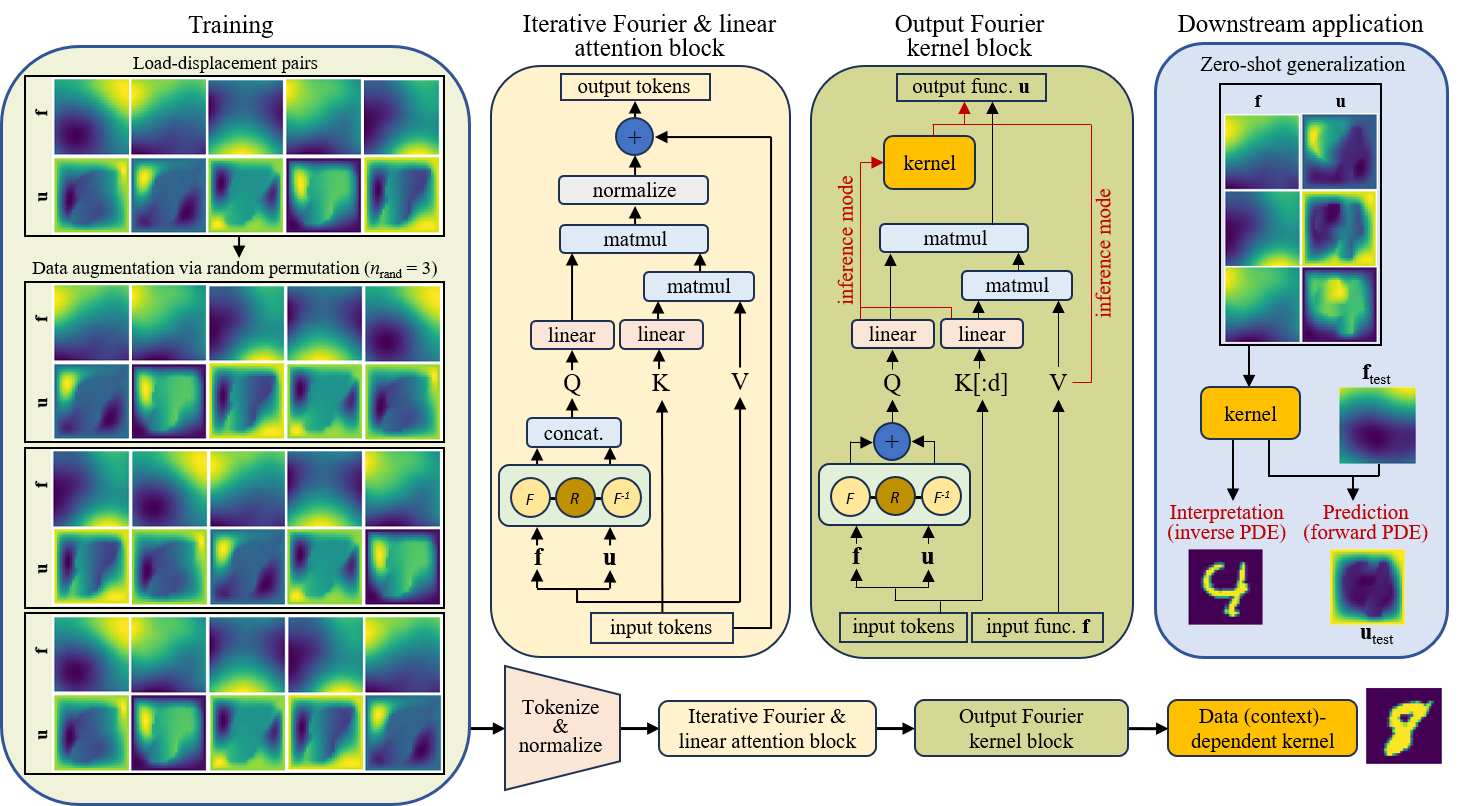

| Date | News |
|---|---|
| May 01, 2025 | Our paper Neural Interpretable PDEs: Harmonizing Fourier Insights with Attention for Scalable and Interpretable Physics Discovery has been accepted to ICML 2025. |

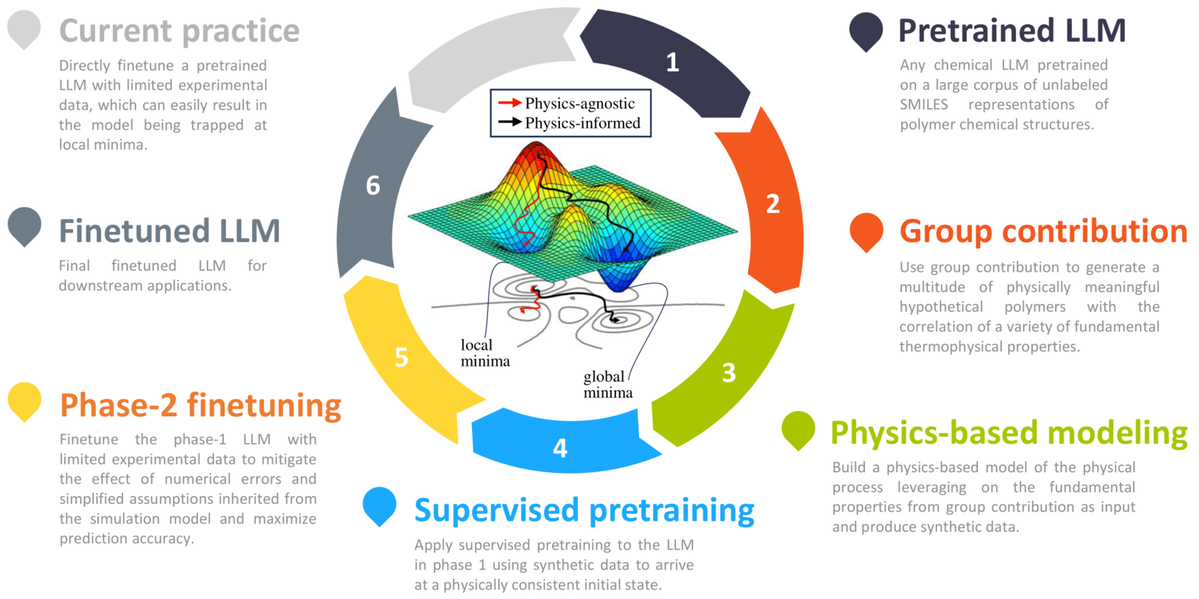

| Feb 10, 2025 | Happy to share that our paper Harnessing large language models for data-scarce learning of polymer properties has been published in Nature Computational Science. |

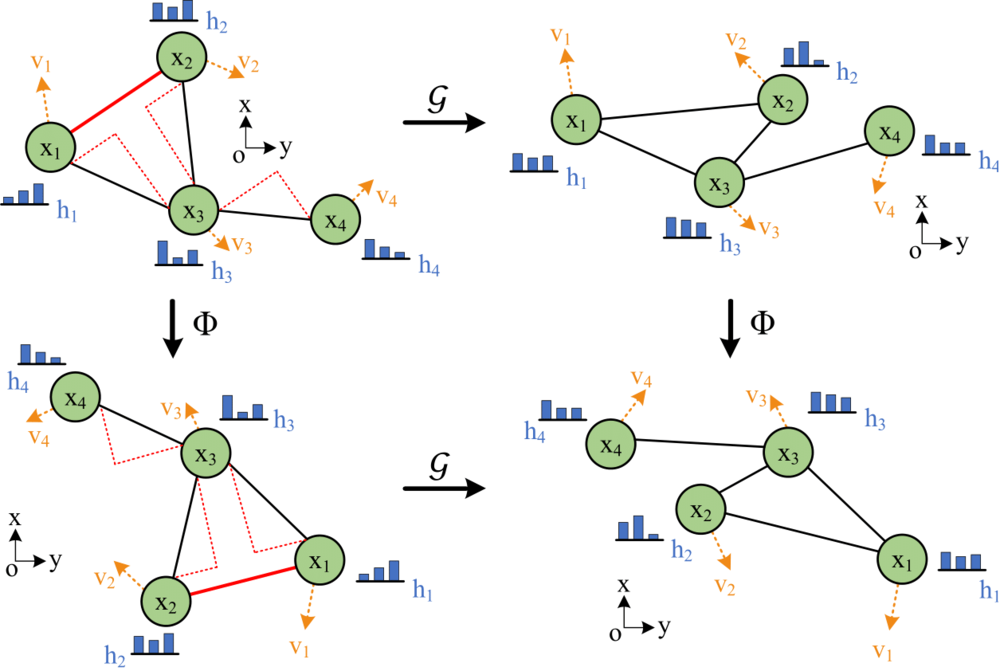

| Oct 03, 2024 | The preprint of our paper Disentangled Representation Learning for Parametric Partial Differential Equations is available on arXiv. |

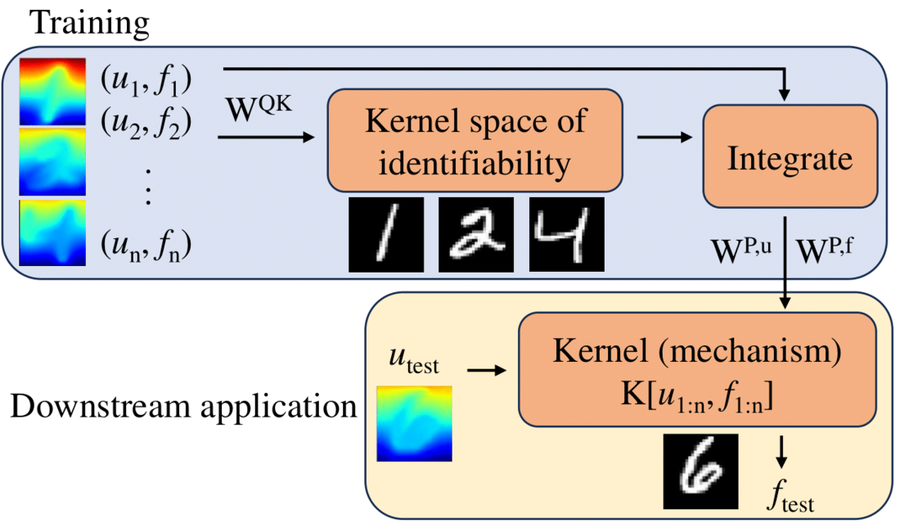

| Sep 25, 2024 | Our paper Nonlocal Attention Operator: Materializing Hidden Knowledge Towards Interpretable Physics Discovery has been accepted to NeurIPS 2024 as a spotlight paper! |

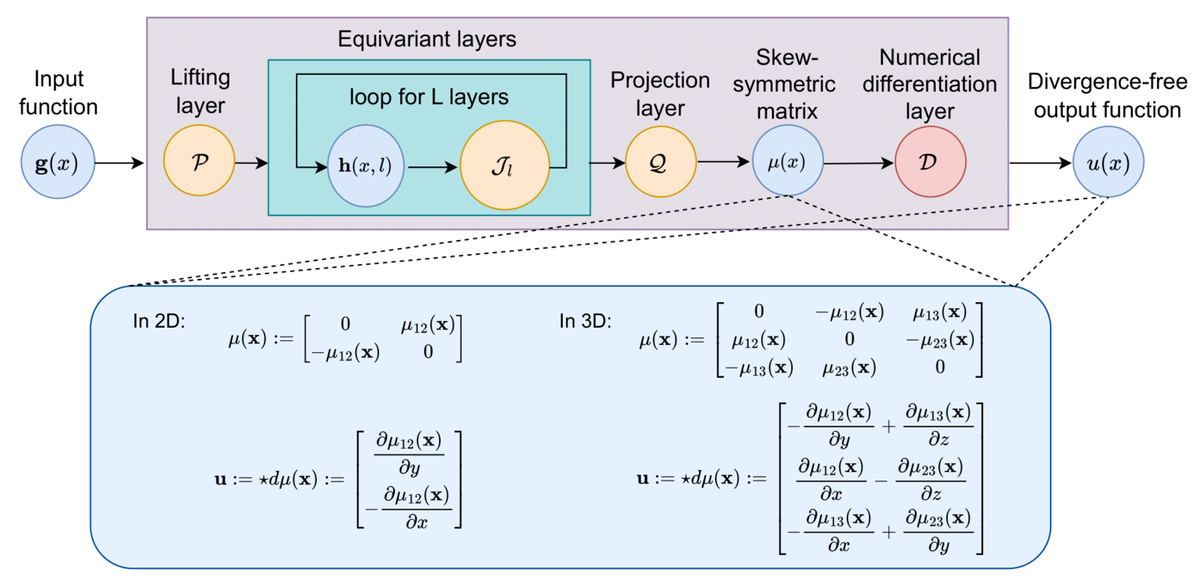

| May 01, 2024 | Our paper Harnessing the power of neural operators with automatically encoded conservation laws has been accepted to ICML 2024 as a spotlight paper! |